Dr. Zhigang Zhu, a professor in the CCNY Department of Computer Science who specializes in computer vision and assistive technology, is a researcher in a CUNY-led consortium that is developing assistive tools to update existing infrastructure.

Dr. Zhu’s research originally centered on computer vision and multimodal sensors, but his focus gradually shifted from robotics applications to assistive technology. Assistive technology is now the main focus of his research in the Visual Computing Lab, which he directs. It also shapes his work as co-director of the CCNY Master’s Program in Data Science and Engineering and as the professor of an undergraduate senior capstone course in computer science on assistive technology.

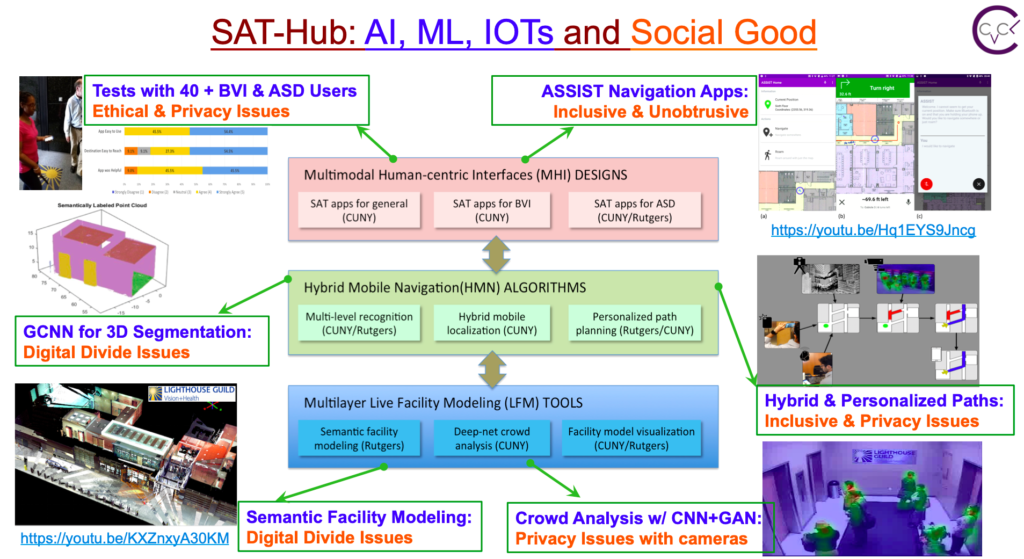

Dr. Zhu is working on the Smart and Accessible Transportation Hub (SAT-Hub), a joint project between CUNY, Rutgers University, Bentley Systems, Inc., and the Lighthouse Guild. Through the partnership, Dr. Zhu and other experts in AI, high-performance computing, and transportation seek to collaborate with potential investors and partners from transportation agencies, local governments, and service institutions to integrate their assistive indoor navigation technology into large infrastructure hubs.

SAT-Hub’s goal is to use its assistive technology to make existing infrastructure more accessible with minimal changes.

The consortium’s principal innovation is the Assistive Sensor Solutions for Independent and Safe Travel (ASSIST) app. The app, which has received funding and support from the National Science Foundation, integrates smart infrastructure with sensing and computing technology, building on SAT-Hub’s core goal of providing navigation assistance to users with disabilities.

“The societal need is significant, especially impactful for people in great need, such as those who are blind or have low vision, and those who have autism spectrum disorder, and those with challenges in finding places, particularly persons unfamiliar with metropolitan areas,” Dr. Zhu said.

“The SAT-Hub will be an exemplar case to extend the reach of their mobility, give them more freedom, allow them to enhance the richness and quality of their lives, and improve their employment opportunities,” he added.

Most recently, in an effort led by lab member Arber Ruci and Jin Chen, ASSIST was spun off into Nearabl, an indoor navigation app that soft-launched last November.

The basis of ASSIST and its successor is indoor navigation, an area of research that in the past was largely limited to autonomous robots.

Over time, however, Dr. Zhu says, indoor navigation systems came to be seen as having the same potential for indoor spaces that GPS has for outdoor navigation. Such systems now typically consist of a mobile platform and sensors such as cameras, lidars, sonars, and inertial measurement units.

Despite the technology’s great potential and obvious utility, Dr. Zhu noted that few resources allow researchers to develop a straightforward model for a navigation system without programming. As a result, it remains difficult for potential users, especially people who are blind, to navigate an environment with no hardware other than a smartphone.

The foundation of the app is the modeling of environments using cameras and other onboard sensors of smartphones.

Using these sensors, the team scans the environment a user needs help navigating and creates a dynamic 3D model of the area that accounts for all entrances, paths, and exit routes, so that the user receives the best possible guidance.

The app’s interface is also carefully designed around the needs of each user, offering audio narration for blind users, for example, or augmented reality guidance for users with autism.

“Our solution is a system-engineering approach to a challenging problem in urban transportation that integrates three major components: multilayer live facility modeling tools, hybrid mobile navigation algorithms, and multimodal human-centric interface designs,” Dr. Zhu said.

Dr. Zhu noted that, beyond SAT-Hub’s work in the lab, the researchers are in continuous contact with potential users of the app to make sure they address all of their needs and make the app as accessible and user-friendly as possible.

Creating this technology and integrating it on a wide scale is not without its challenges. In addition to the initial entrepreneurial challenges of bringing the app to market, data collection remains a major hurdle, since the app requires intensive scanning of environments to produce dynamic, accurate 3D models.

On a smaller scale, Dr. Zhu and his fellow researchers are also continually refining the technology so that the interface is tailored to users’ needs and as useful as possible.

In addition to updating many of the largest infrastructure hubs in the United States, Dr. Zhu and his team hope the technology can be integrated into other public facilities, including much smaller ones, such as libraries, airports, schools, and office buildings.

These potential expansions are all part of Dr. Zhu and his team’s goal of deploying this technology on a wide scale to improve the quality of life for everyone in New York City and beyond.

“We want to expand the research, all this infrastructure modeling just for navigation, to the overall landscape data, not just for transportation, but for other kinds of sectors of the application,” Dr. Zhu said.

Gabriel is a student at the Weissman School of Arts and Sciences at Baruch College, double majoring in journalism and political science. He is also the editor of the Science & Technology section of Baruch College’s independent, student-run newspaper, The Ticker.